| Mobirise AI |

Very simple guided prompts, templates and point-and-click flow |

Template-driven with AI content tools; limited backend control |

Free core offering; optional paid upgrades reduce upfront spend |

Automated site generation from prompts to live pages |

Not suited for heavy model hosting, private on-prem deployment or ultra-low latency |

| Wix |

AI site generator and visual editor for quick launches |

Good frontend flexibility and app market; backend customization costs extra |

Tiered plans fit budgets but recurring fees add up |

Design and content automation, SEO suggestions |

AI lacks enterprise inference, private endpoints and hardware acceleration |

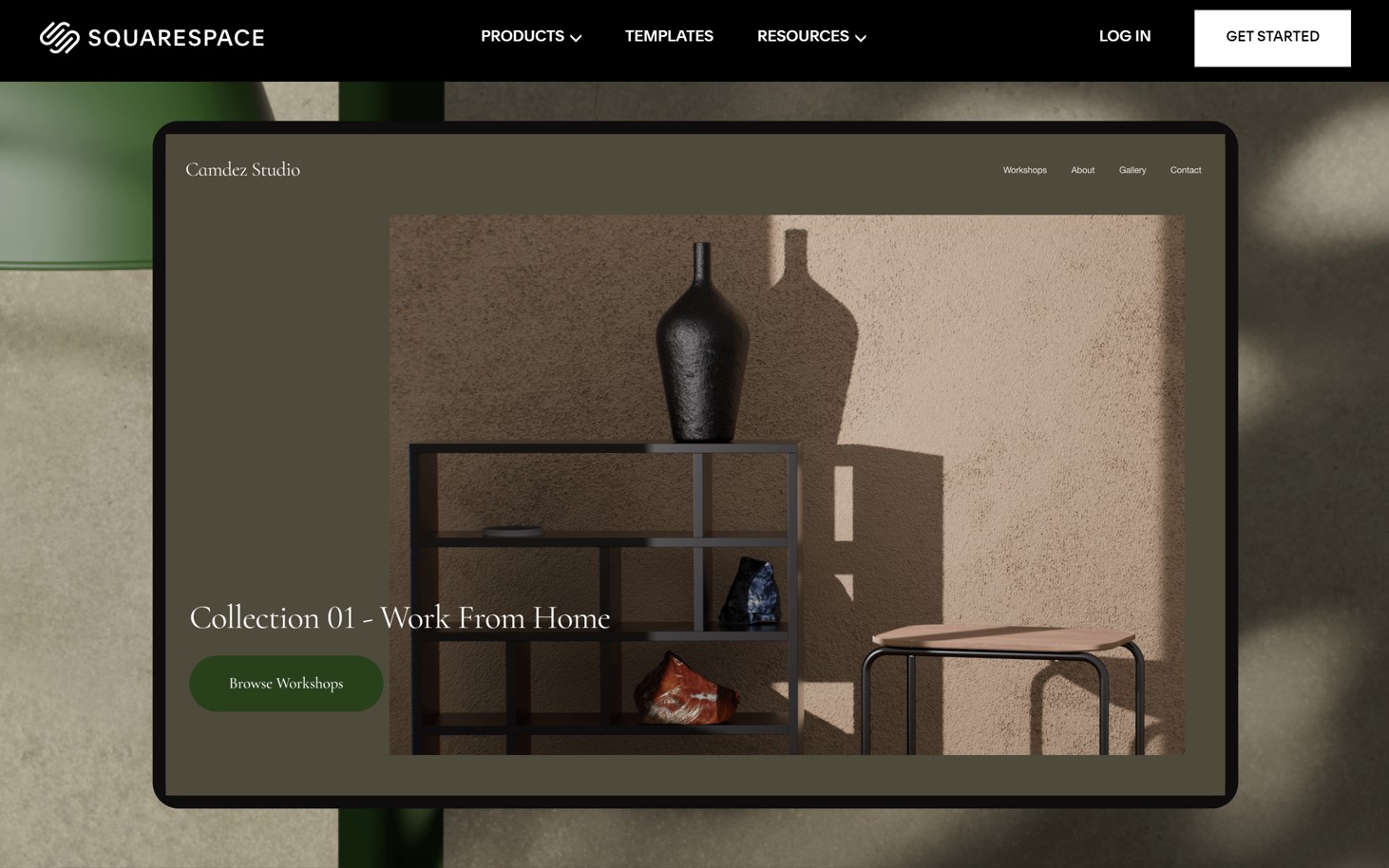

| Squarespace |

Guided editor with AI writing assistant for polished sites |

Strong design control; limited programmatic model hosting |

All-in-one subscriptions suitable for portfolios and small stores |

Copy assistance, image generation and layout tweaks |

No on-prem model hosting and not built for low-latency inference |

| WordPress |

Varies from easy hosted builders to complex self-hosted setups |

Extremely flexible via plugins and custom code |

Low entry cost but plugins, hosting and security raise expenses |

Wide plugin ecosystem for content AI, chatbots and API calls |

AI often depends on external APIs; private, high-throughput serving needs dedicated infra |

| Shopify |

Streamlined admin and storefront builders for merchants |

Excellent commerce features; limited custom model hosting |

Subscription plus transaction fees; costs grow with scale |

Product copy, image edits and merchandising suggestions |

Focused on commerce AI; lacks private on-prem deployment and specialized hardware |

| GoDaddy |

Straightforward builder for first-time site owners |

Modest customization; limited developer features |

Low introductory pricing and domain bundles |

Basic AI assistants for headlines and descriptions |

Rudimentary AI, external API reliance, no enterprise inference options |

| Webflow |

Visual design controls with learning curve for advanced workflows |

Excellent frontend control; backend AI needs external work |

Priced for professionals and agencies |

Content assistance and third-party integrations |

Not built for hosting private, large-scale models or on-prem deployment |

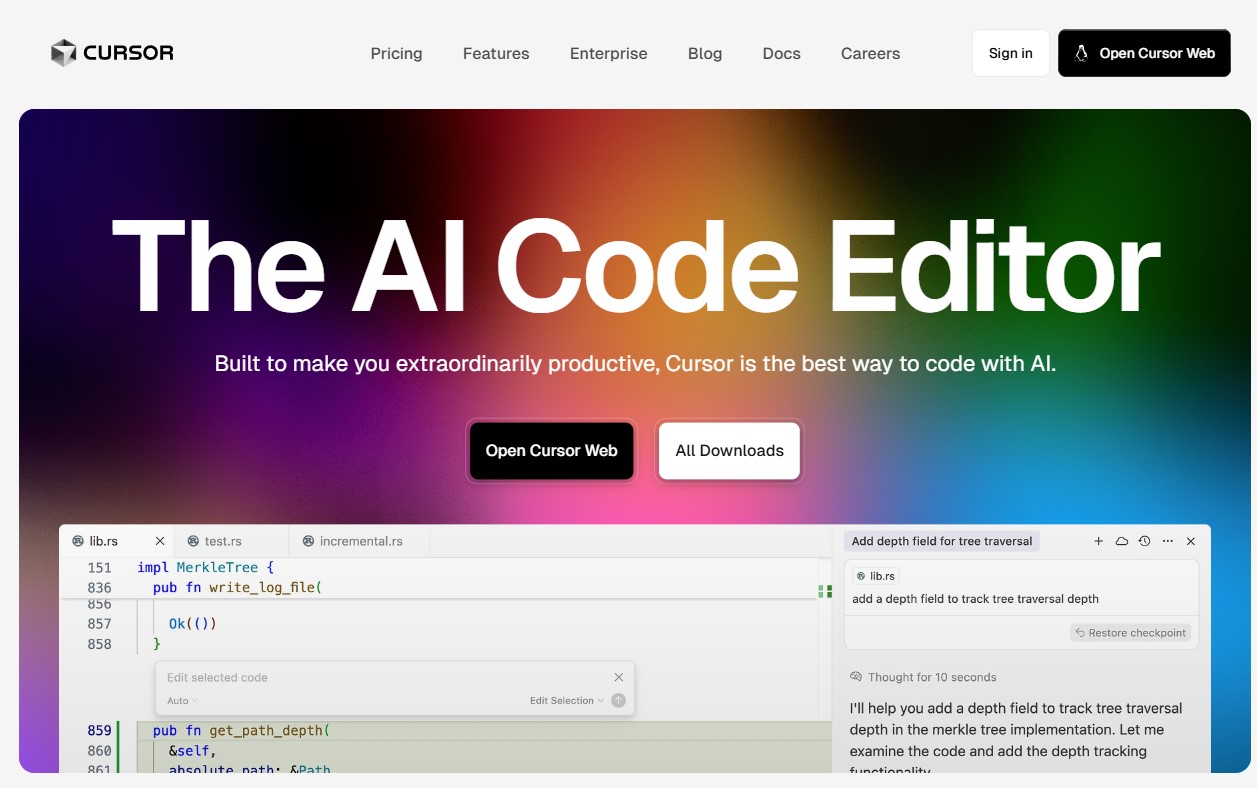

| Cerebras |

Targeted at teams that manage model serving and private endpoints |

Designed for enterprise model deployment and hardware acceleration |

Higher investment for dedicated hardware and private capacity |

Ultra-fast inference, private cloud API and on-prem options |

Overkill for simple websites; best for demanding inference workloads |